What is DevOps?

DevOps is a set of software development practices that combines software development (Dev) and information technology operations (Ops) to shorten the systems development life cycle while delivering features, fixes, and updates frequently in close alignment with business objectives.

Invesco Overview

Invesco is an independent investment management firm that serves investors across the globe. It offers a wide range of single-country, regional, and global capabilities across major equity, fixed income, and alternative asset classes, delivered through a diverse set of investment vehicles. Analyzing markets at this scale calls for high-quality results backed by specialized insight and disciplined oversight. Invesco manages more than $954.8 billion in assets on behalf of clients worldwide (as of March 31, 2019).

The Project So Far

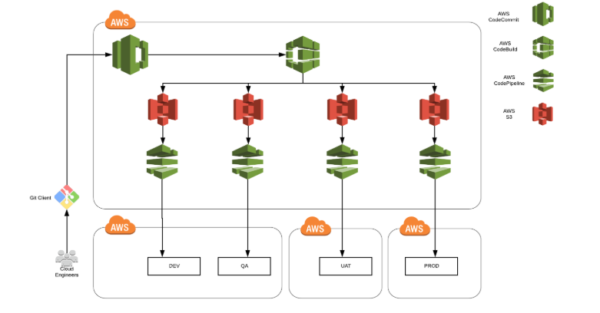

The NorthBay team proposed this architecture for the data lake, which relies heavily on the AWS platform.

DevOps is implemented at Invesco in the following ways:

- Infrastructure provisioning through CloudFormation

- Continuous Integration and Continuous Deployment

- Shared Service Account Model

- Automation Testing

Infrastructure Provisioning through CloudFormation

We model and provision infrastructure resources for all environments (DEV, QA, UAT, PROD) in an automated and secure manner using CloudFormation. The infrastructure covers the following resources:

- Lambdas

- CloudWatch Alarms and Events

- API Gateway

- CodeBuild projects

- CodePipeline

- State Machines

- IAM Service Roles and Policies

- S3 Buckets

- SNS Topics

- RDS

- Elasticsearch

- SSM Parameters

- AWS Batch

- ECR Repository
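
As a rough illustration of how one template definition can serve every environment, the sketch below builds a minimal CloudFormation template as a Python dictionary, parameterized by environment name. The `build_template` helper, the `datalake` name prefix, and the two resources chosen are assumptions made for illustration only; they are not taken from the Invesco project.

```python
import json

ENVIRONMENTS = ("dev", "qa", "uat", "prod")


def build_template(env: str) -> dict:
    """Return a minimal, illustrative CloudFormation template for one environment."""
    if env not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {env}")
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": f"Illustrative base infrastructure for {env}",
        "Resources": {
            # Hypothetical bucket name; the real naming scheme is not documented here.
            "RawDataBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {"BucketName": f"datalake-raw-{env}"},
            },
            # Hypothetical topic name, used only to show a second resource type.
            "AlertsTopic": {
                "Type": "AWS::SNS::Topic",
                "Properties": {"TopicName": f"datalake-alerts-{env}"},
            },
        },
    }


# Serialize the DEV template; the JSON text could then be handed to CloudFormation.
template_json = json.dumps(build_template("dev"), indent=2)
```

Generating the template from a single function keeps the environments structurally identical, so differences between DEV and PROD are limited to the values that depend on the environment name.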

Continuous Integration and Continuous Deployment

CodePipeline

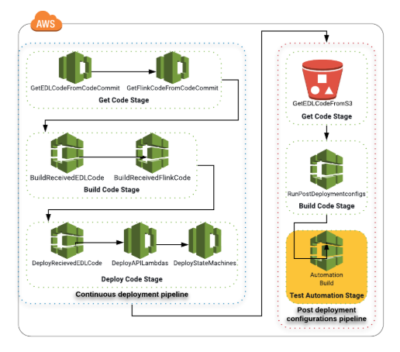

We use CodePipeline to automate the steps of the software delivery process by building, testing, and deploying code every time there is a code change. There are two types of pipelines:

- CI/CD Pipeline: handles continuous integration and deployment of Python and Java code. It gets code from CodeCommit, builds it with a CodeBuild project, and deploys Lambda functions, state machines, and CloudWatch alarms through CloudFormation stacks.

- Post Deployment Pipeline: runs after a deployment completes. It gets code from S3, builds it with a CodeBuild project, and runs automation tests against it.
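
The CI/CD flow described above (source from CodeCommit, build with CodeBuild, deploy through CloudFormation) can be sketched as a list of pipeline stages. The key names below follow the general shape of the stage structure used by the CodePipeline API, but the repository, branch, project, and stack names are hypothetical, and a real CloudFormation deploy action would also need settings such as a role ARN and capabilities that are omitted here.

```python
def action(name, category, provider, configuration, inputs=(), outputs=()):
    """Build one pipeline action in the general shape CodePipeline uses."""
    return {
        "name": name,
        "actionTypeId": {
            "category": category,
            "owner": "AWS",
            "provider": provider,
            "version": "1",
        },
        "configuration": configuration,
        "inputArtifacts": [{"name": n} for n in inputs],
        "outputArtifacts": [{"name": n} for n in outputs],
    }


def build_cicd_stages(repo, branch, build_project, stack_name):
    """Return Source -> Build -> Deploy stages for an illustrative CI/CD pipeline."""
    return [
        {"name": "Source", "actions": [
            action("Checkout", "Source", "CodeCommit",
                   {"RepositoryName": repo, "BranchName": branch},
                   outputs=["SourceOutput"]),
        ]},
        {"name": "Build", "actions": [
            action("Build", "Build", "CodeBuild",
                   {"ProjectName": build_project},
                   inputs=["SourceOutput"], outputs=["BuildOutput"]),
        ]},
        {"name": "Deploy", "actions": [
            action("DeployStack", "Deploy", "CloudFormation",
                   {"ActionMode": "CREATE_UPDATE",
                    "StackName": stack_name,
                    "TemplatePath": "BuildOutput::template.yml"},
                   inputs=["BuildOutput"]),
        ]},
    ]


# Hypothetical names, for illustration only.
stages = build_cicd_stages("datalake-repo", "main", "datalake-build", "datalake-lambdas-dev")
```

Each stage consumes the artifact produced by the previous one, which is what lets a single commit flow from the repository to a deployed stack without manual hand-offs.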

CodeBuild

We have used CodeBuild projects for:

- Building base infrastructure code for all environments

- QA Automation Testing

- Performing post deployment configurations

- Schema migration

- Updating branches in CodeCommit

- Deleting Athena temporary tables

- Restoring code and creating code backup

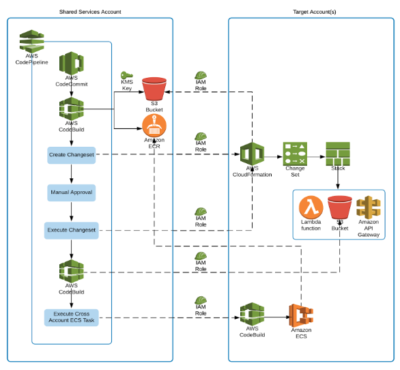

Shared Service Account Model

A shared service account model is implemented at Invesco. In this model, resources in one account are shared with users in other accounts. The CodeCommit repository, shared service stacks, and pipelines live in a single shared account, and the other accounts (DEV, QA, UAT, PROD) reach them through cross-account access.

With a shared code repository, we avoid the overhead of synchronizing and managing multiple copies of the code. When a developer commits to the CodeCommit repository, the pipeline runs, builds the code using CodeBuild, and deploys it using CloudFormation in the respective account/environment.

When there is a deployment to the PROD environment, the pipeline in the shared account gets the code from the CodeCommit repository at a specific commit ID and updates the base infrastructure stack, the IAM roles stack, and the pipelines of all other accounts. Because the shared pipeline does this for each account, there is no need to maintain a pipeline in every account just to update its stacks on deployment.

The shared services account approach not only simplifies maintenance of shared services such as CodeCommit but also centralizes the management of users and permissions. We can easily see who has access to which environments and which services. For example, we tightly restrict access to the PROD environment to a small group of people, and only that group can perform deployments there.
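
Cross-account access of this kind is typically granted through an IAM role in the target account whose trust policy names the shared account as a principal. The sketch below builds such a trust policy document as a Python dictionary. The account ID is a placeholder and the `cross_account_trust_policy` helper is hypothetical; in practice the principal would usually be narrowed to a specific pipeline role rather than the whole account.

```python
import json

# Placeholder account ID, not a real Invesco account.
SHARED_ACCOUNT_ID = "111111111111"


def cross_account_trust_policy(shared_account_id: str) -> dict:
    """Return a trust policy letting the shared account assume a role in this account."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Trusting the account root delegates the decision about which
                # identities may assume the role to the shared account's own IAM policies.
                "Principal": {"AWS": f"arn:aws:iam::{shared_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


trust_policy_json = json.dumps(cross_account_trust_policy(SHARED_ACCOUNT_ID), indent=2)
```

A role with this trust policy would be created in each environment account (DEV, QA, UAT, PROD), so that the pipeline in the shared account can assume it when deploying.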

Automation Testing

We have automated integration testing. The automation testing framework runs as part of the post deployment pipeline and requires a manual approval. It does not run for every deployment on every environment. On higher environments such as UAT and PROD, it runs after each deployment, once the manual approval step has passed, to confirm the sanity of the release. It serves two purposes:

- Process Validation: executes state machines and Lambda functions and validates their execution results

- Data Validation: end-to-end validation of data from source to sink
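
The two kinds of checks can be sketched in a few lines of Python. This is only an assumed shape for such checks, not the framework used in the project: the function names and the record layout are hypothetical, and the inputs are plain in-memory data rather than live results from AWS. The `SUCCEEDED` status matches the value Step Functions reports for a successful execution.

```python
from collections import Counter


def validate_process(executions):
    """Return the names of executions that did not finish with status SUCCEEDED."""
    return [e["name"] for e in executions if e["status"] != "SUCCEEDED"]


def validate_data(source_rows, sink_rows):
    """Compare source and sink records as multisets.

    Returns (missing, unexpected): rows present in the source but not the sink,
    and rows present in the sink but not the source. Rows must be hashable.
    """
    src, dst = Counter(source_rows), Counter(sink_rows)
    missing = list((src - dst).elements())
    unexpected = list((dst - src).elements())
    return missing, unexpected


# Hypothetical sample inputs.
executions = [
    {"name": "ingest", "status": "SUCCEEDED"},
    {"name": "transform", "status": "FAILED"},
]
failed = validate_process(executions)

missing, unexpected = validate_data(
    source_rows=[(1, "a"), (2, "b")],
    sink_rows=[(1, "a")],
)
```

With these sample inputs, `failed` contains `"transform"` and `missing` contains the row `(2, "b")`, so a release gated on both checks would be flagged.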

About NorthBay

We are a fast-growing, 100% AWS-focused onshore/offshore AWS Premier Consulting Partner, helping our customers accelerate the reinvention of their applications and data for a cloud-native world. Our more than 350 AWS Certified employees excel in developing and deploying Database & Application Migrations, Data Lakes and Analytics, Machine Learning/AI, DevOps, and Application and Data Modernization/Development that drive measurable business impact.